Cross-Domain Autonomous Driving Perception using Contrastive Appearance Adaptation

Ziqiang Zheng¹, Yingshu Chen¹, Binh-Son Hua²,³, Yang Wu⁴, Sai-Kit Yeung¹

¹The Hong Kong University of Science and Technology

²Trinity College Dublin

³VinAI Research

⁴Tencent AI Lab

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) 2023

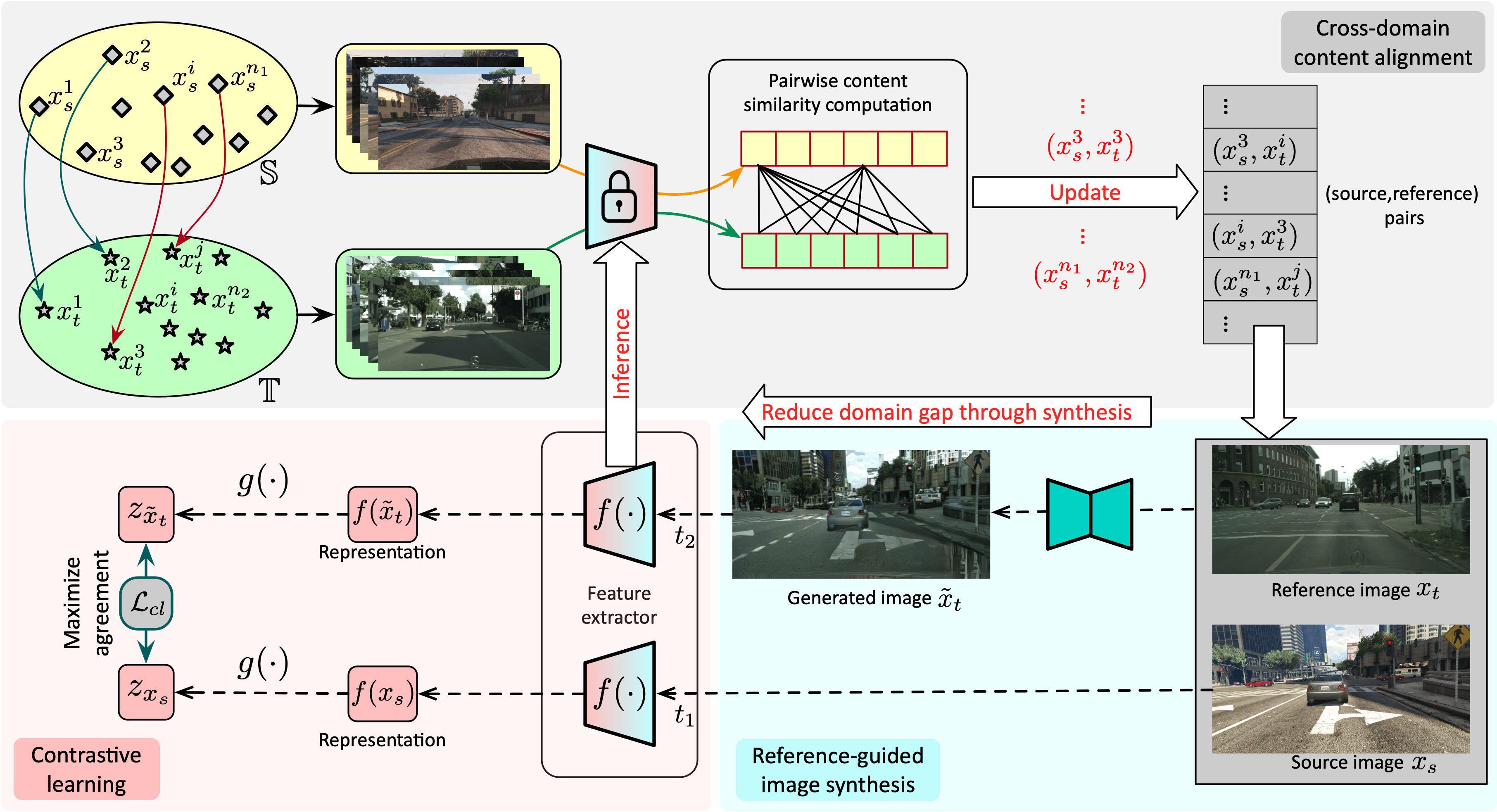

Framework Overview. The core of our image-level domain adaptation is a cycle of three mutually beneficial modules: cross-domain content alignment (CDCA), reference-guided image synthesis, and contrastive learning. CDCA uses the domain-invariant feature extractor learned through contrastive learning to construct source-reference image pairs, which are used to train the reference-guided image synthesis module to produce target-like images. The source images and their target-like counterparts then serve as augmented views for contrastive learning, which in turn improves the domain-invariant feature extractor. Finally, the target-like images, together with the source labels, can be used for downstream perception tasks.
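Two steps in this cycle can be made concrete. The first pairs each source image with a content-similar target reference in feature space. The second is a contrastive objective that pulls a source image and its target-like translation together while pushing apart other images in the batch. The following is a minimal NumPy sketch under stated assumptions, not the authors' implementation. It assumes cosine-similarity nearest-neighbour retrieval for the pairing and a standard InfoNCE loss for the contrastive step. The function names `pair_by_content` and `info_nce`, the temperature of 0.1, and the use of plain feature arrays in place of extractor outputs are all hypothetical.

```python
import numpy as np


def l2_normalize(x, eps=1e-8):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def pair_by_content(src_feats, tgt_feats):
    """Hypothetical pairing step (assumption: nearest neighbour by cosine similarity).

    src_feats: (N, D) features of source images.
    tgt_feats: (M, D) features of candidate target reference images.
    Returns an (N,) array with the index of the most similar target for each source.
    """
    sim = l2_normalize(src_feats) @ l2_normalize(tgt_feats).T  # (N, M) cosine similarities
    return sim.argmax(axis=1)


def info_nce(anchor, positive, temperature=0.1):
    """Standard InfoNCE loss (assumed form; the paper's exact loss may differ).

    Row i of `anchor` (e.g. a source image) and row i of `positive`
    (e.g. its target-like translation) form a positive pair; every other
    row of `positive` acts as a negative for anchor i.
    """
    a = l2_normalize(anchor)
    p = l2_normalize(positive)
    logits = a @ p.T / temperature                         # (N, N) scaled similarities
    logits = logits - logits.max(axis=1, keepdims=True)    # subtract row max for numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))  # row-wise log-softmax
    return -np.mean(np.diag(log_prob))                     # average over positive pairs
```

In the cycle described above, the indices returned by `pair_by_content` would select which target image serves as the reference when synthesizing each target-like image. `info_nce` would then be applied to the extractor's features of a source image and its synthesized counterpart. A lower loss means that the two views of the same content are closer to each other than to the other images in the batch.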

Citation

@inproceedings{zheng2023compuda,
  title={Cross-Domain Autonomous Driving Perception using Contrastive Appearance Adaptation},
  author={Ziqiang Zheng and Yingshu Chen and Binh-Son Hua and Yang Wu and Sai-Kit Yeung},
  booktitle={2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  year={2023},
  organization={IEEE}
}